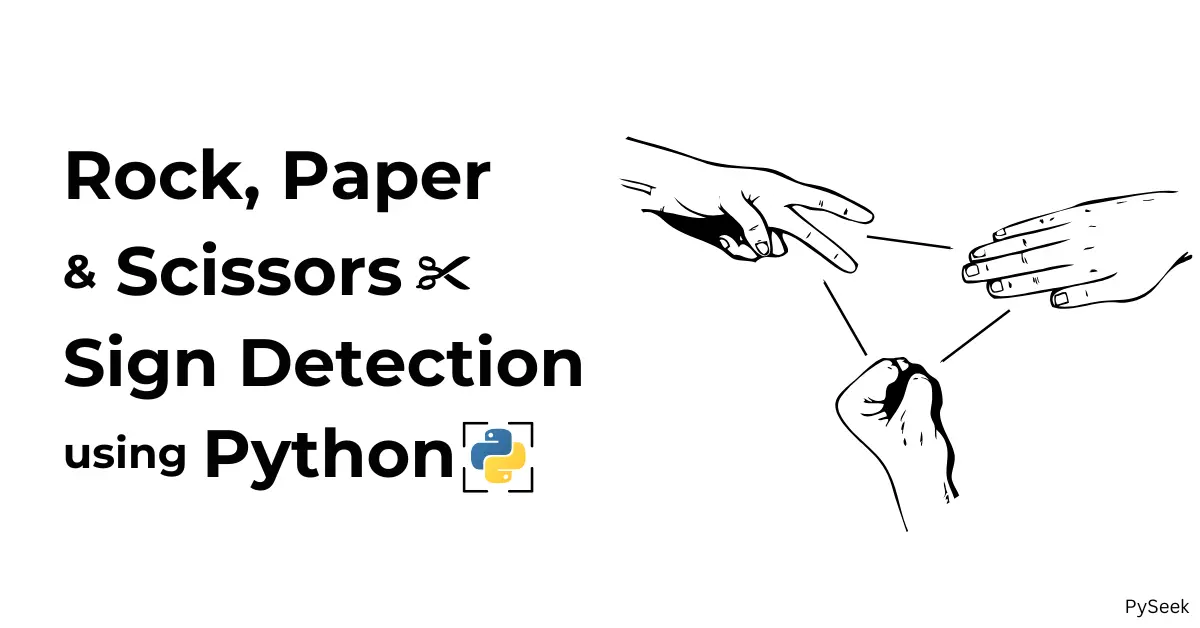

Imagine playing rock-paper-scissors with your computer, with your hand gestures recognized through your webcam in real time. With advances in machine learning, specifically YOLOv8, you can implement this hand gesture recognition project in Python quite easily.

In this article, we’ll walk through the entire process of setting up the project, including training a model to recognize hand gestures like rock, paper, and scissors, using a custom dataset.

If you’re curious about using Python for real-time gesture recognition or want to create a fun, interactive project, this tutorial is for you!

Requirements

Before diving into the code, make sure Python 3.8 or later is installed on your computer (recent releases of Ultralytics and PyTorch no longer support older Python versions). If you don’t have Python, you can download it for free from https://www.python.org/downloads/.

Next, install all the required dependencies with the following commands:

```
pip install "gitpython>=3.1.30"
pip install "matplotlib>=3.3"
pip install "numpy>=1.23.5"
pip install "opencv-python>=4.1.1"
pip install "pillow>=10.3.0"
pip install psutil
pip install "PyYAML>=5.3.1"
pip install "requests>=2.32.0"
pip install "scipy>=1.4.1"
pip install "thop>=0.1.1"
pip install "torch>=1.8.0"
pip install "torchvision>=0.9.0"
pip install "tqdm>=4.64.0"
pip install "ultralytics>=8.2.34"
pip install "pandas>=1.1.4"
pip install "seaborn>=0.11.0"
pip install "setuptools>=65.5.1"
pip install filterpy
pip install scikit-image
pip install lap
pip install cvzone
```

The version specifiers are quoted because most shells otherwise treat ‘>’ as output redirection, which silently drops the version constraint. ‘cvzone’ is included because the program below imports it.

Alternative Installation

Installing these packages one by one can be tedious. Instead, you can download the ‘requirements.txt’ file containing all the dependencies above (or create one yourself, with one package per line) and install everything in one go with the following command:

```
pip install -r requirements.txt
```
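After installing, you can confirm that the key packages are present. The sketch below uses only the standard library’s ‘importlib.metadata’; the package list is an illustrative subset of the requirements above (plus ‘cvzone’, which the program imports), and ‘installed_versions’ is a hypothetical helper name, so extend the list as needed.

```python
from importlib.metadata import PackageNotFoundError, version

# Illustrative subset of the project's dependencies (pip distribution names)
PACKAGES = ["ultralytics", "torch", "opencv-python", "cvzone", "numpy"]


def installed_versions(packages=PACKAGES):
    """Return {package: installed version string, or None if it is missing}."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


if __name__ == "__main__":
    for name, ver in installed_versions().items():
        print(f"{name:15} {ver or 'NOT INSTALLED'}")
```

Any package reported as ‘NOT INSTALLED’ can be installed individually with the matching command above.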

Training the YOLO Model on a Custom Dataset

First, we have to train our YOLO model. Follow the steps below:

Download the Dataset

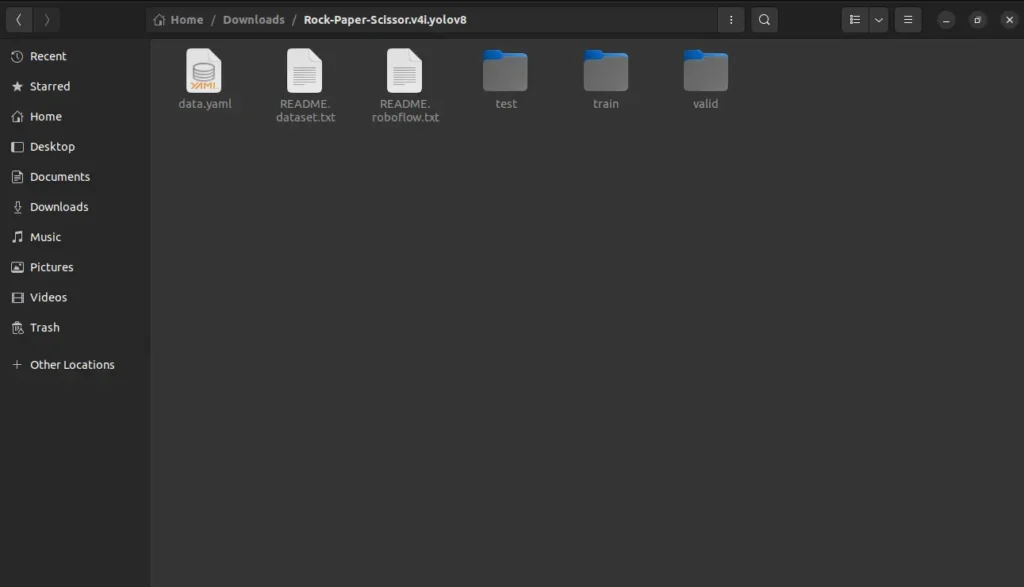

Download the rock-paper-scissors dataset from roboflow.com, choosing the YOLOv8 export format.

Now unzip the downloaded dataset. The folder should look like the following:

Training the YOLOv8 Model on the Custom Dataset Using Colab

Open Google Colab, sign in with your Google account, and create a new notebook.

Now go to the ‘Runtime’ menu, select ‘Change runtime type’, choose ‘T4 GPU’ as the hardware accelerator, and save.

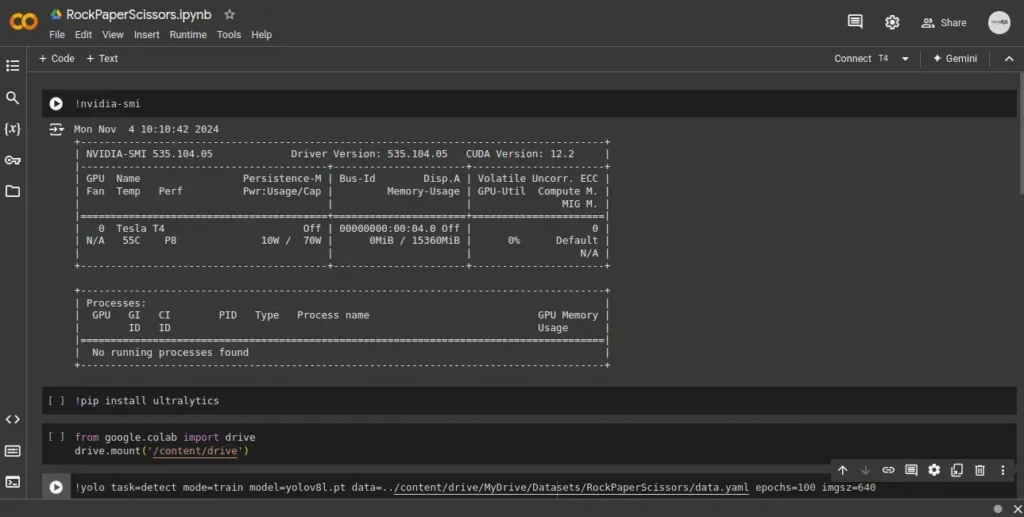

Check that the GPU is available by running the following command in a cell:

```
!nvidia-smi
```

The output should look like the following:

Next, install Ultralytics in your Colab workspace with the following command:

```
!pip install ultralytics
```

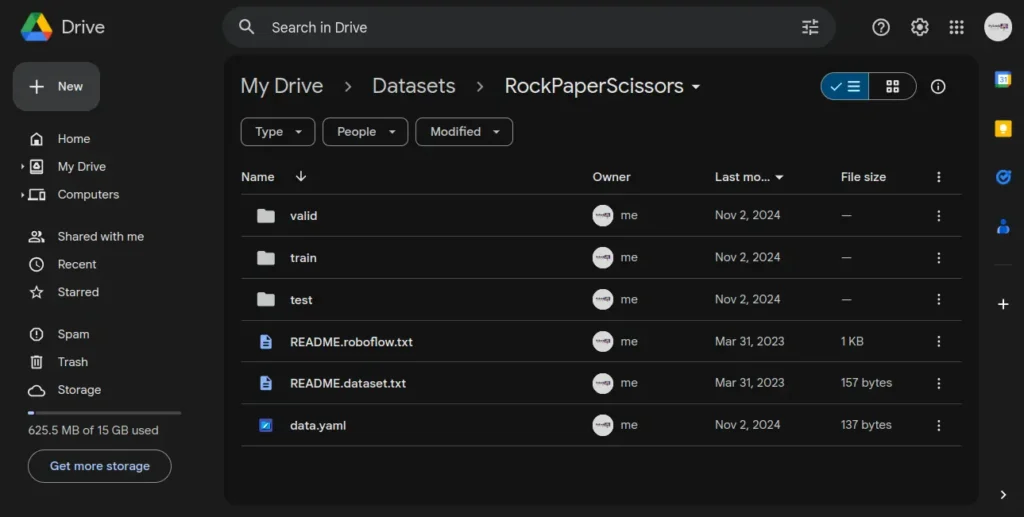
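To double-check from Python that PyTorch, the framework YOLOv8 runs on, can see the GPU, a small helper like the one below can be run in a new cell. It is a sketch: ‘describe_gpu’ is a hypothetical name, and it relies only on PyTorch’s ‘torch.cuda’ functions.

```python
def describe_gpu():
    """Report which device PyTorch (and therefore YOLOv8) can use for training."""
    try:
        import torch  # preinstalled in Colab and pulled in by ultralytics
    except ImportError:
        return "PyTorch is not installed"
    if torch.cuda.is_available():
        # Name of the first visible CUDA device, e.g. the T4 selected earlier
        return f"CUDA GPU available: {torch.cuda.get_device_name(0)}"
    return "No CUDA GPU detected; training would run on the CPU and be much slower"


print(describe_gpu())
```

If it reports no GPU, revisit the ‘Change runtime type’ setting before starting a long training run.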

Next, open Google Drive and go to ‘My Drive’. Create a folder named ‘Datasets’ there, and inside ‘Datasets’ create another folder named ‘RockPaperScissors’.

Open the unzipped dataset folder, select everything inside it, and drag it into the ‘RockPaperScissors’ folder on Google Drive. The upload may take a while, so wait until it finishes. The final ‘RockPaperScissors’ folder will look like the following:

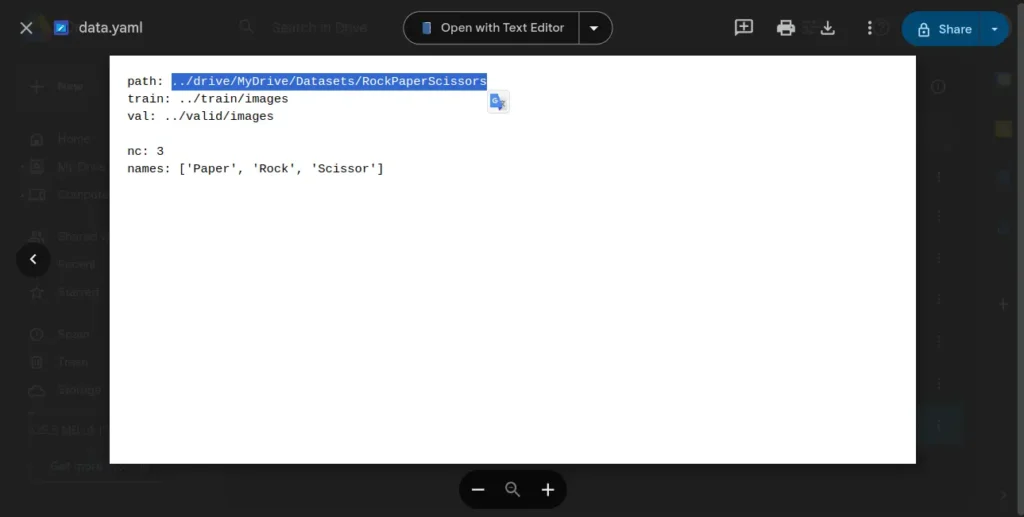
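To sanity-check the dataset before training, the sketch below counts images and label files per split and lists images that have no label file. It assumes the usual Roboflow YOLOv8 export layout (a ‘data.yaml’ plus ‘train’, ‘valid’, and ‘test’ folders, each with ‘images’ and ‘labels’ subfolders, one ‘.txt’ label per image with the same file name); ‘summarize_dataset’ is a hypothetical helper, so adjust ‘SPLITS’ if your download differs.

```python
from pathlib import Path

# Assumed Roboflow YOLOv8 export layout; adjust if your download differs
SPLITS = ("train", "valid", "test")
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}


def summarize_dataset(root):
    """Count images and label files per split and report images without labels."""
    root = Path(root)
    if not (root / "data.yaml").is_file():
        raise FileNotFoundError(f"data.yaml not found in {root}")
    summary = {}
    for split in SPLITS:
        images_dir, labels_dir = root / split / "images", root / split / "labels"
        if not images_dir.is_dir():
            continue  # skip splits the export does not contain
        images = [p for p in images_dir.iterdir() if p.suffix.lower() in IMAGE_EXTS]
        labels = {p.stem for p in labels_dir.glob("*.txt")} if labels_dir.is_dir() else set()
        unlabeled = [p.name for p in images if p.stem not in labels]
        summary[split] = {"images": len(images), "labels": len(labels), "unlabeled": unlabeled}
    return summary


# Example: summarize_dataset("path/to/unzipped/dataset")
```

A split with many unlabeled images usually means files were lost or renamed during the upload.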

Now open the ‘data.yaml’ file in a text editor and set its ‘path’ entry to the dataset’s location on Google Drive: “/content/drive/MyDrive/Datasets/RockPaperScissors” (add a ‘path’ line if the file does not have one). The final ‘data.yaml’ file will look like the following:

Now go back to your Colab notebook and mount your Google Drive. Insert the following code in a new cell and run it:

```python
from google.colab import drive

drive.mount('/content/drive')
```

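Colab will ask you to authorize access to your Google Drive. Once it is mounted, you should see the message “Mounted at /content/drive”. If the Drive was already mounted, you will instead see “Drive already mounted at /content/drive; to attempt to forcibly remount, call drive.mount("/content/drive", force_remount=True).”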
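With the Drive mounted, the ‘path’ edit to ‘data.yaml’ can also be made from the notebook instead of a text editor. The sketch below is a simple line-based edit, not a full YAML parser: it assumes a flat file like the Roboflow export, where ‘path’ (if present) is a top-level key on its own line. ‘set_dataset_path’ is a hypothetical helper, and the example location is the absolute path where the Drive is mounted.

```python
import re


def set_dataset_path(yaml_text, dataset_root):
    """Return data.yaml text with its top-level 'path:' entry set to dataset_root.

    If the file has no 'path:' line, one is added at the top.
    """
    new_line = f"path: {dataset_root}"
    pattern = re.compile(r"^path:.*$", flags=re.MULTILINE)
    if pattern.search(yaml_text):
        # A function replacement keeps backslashes in the path from being read as escapes
        return pattern.sub(lambda _match: new_line, yaml_text, count=1)
    return new_line + "\n" + yaml_text


# Example (in Colab, after mounting the Drive):
# from pathlib import Path
# yaml_file = Path("/content/drive/MyDrive/Datasets/RockPaperScissors/data.yaml")
# yaml_file.write_text(set_dataset_path(yaml_file.read_text(),
#                                       "/content/drive/MyDrive/Datasets/RockPaperScissors"))
```

All other keys (‘train’, ‘val’, ‘names’, and so on) are left untouched.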

Now we will start training our YOLO model with our custom dataset. Again, create a new cell, insert the command below, and run it.

```
!yolo task=detect mode=train model=yolov8l.pt data=/content/drive/MyDrive/Datasets/RockPaperScissors/data.yaml epochs=100 imgsz=640
```

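Here, ‘model=yolov8l.pt’ starts training from the pretrained YOLOv8 Large checkpoint, which Ultralytics downloads automatically. ‘epochs=100’ sets the number of training epochs. An epoch is one complete pass through the entire training dataset, so the model will see every training image 100 times.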
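To get a feel for what 100 epochs means in practice, the arithmetic below counts how many batches the model processes. It assumes Ultralytics’ default batch size of 16 (the command above does not set ‘batch’) and uses a hypothetical dataset size, not the real size of the Roboflow dataset; ‘training_steps’ is a hypothetical helper.

```python
import math


def training_steps(num_train_images, epochs=100, batch=16):
    """Batches processed per epoch and in total (16 is Ultralytics' default batch size)."""
    batches_per_epoch = math.ceil(num_train_images / batch)  # a final partial batch still counts
    return batches_per_epoch, batches_per_epoch * epochs


# Hypothetical dataset size, not the real size of the Roboflow dataset
per_epoch, total = training_steps(2000)
print(per_epoch, total)  # 125 12500
```

Doubling the number of epochs doubles the total work, which is the main reason long runs take hours even on a GPU.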

‘imgsz=640’ sets the input image size for training: each image is scaled so that its longer side is 640 pixels (keeping its aspect ratio), and the model is trained on 640×640 inputs.
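Images are usually not simply stretched to a square: YOLO-style pipelines typically keep the aspect ratio by scaling the longer side to ‘imgsz’ and padding the rest (often called letterboxing). The arithmetic can be sketched as follows. This is a simplification for illustration; Ultralytics’ training pipeline also applies augmentations such as mosaic, and ‘letterbox_size’ is a hypothetical name, not an Ultralytics function.

```python
def letterbox_size(width, height, imgsz=640):
    """Scale so the longer side equals imgsz (aspect ratio kept), then pad to imgsz x imgsz.

    Returns ((new_width, new_height), (pad_x, pad_y)), where the padding is the
    total number of pixels added horizontally and vertically.
    """
    scale = imgsz / max(width, height)
    new_w, new_h = round(width * scale), round(height * scale)
    return (new_w, new_h), (imgsz - new_w, imgsz - new_h)


print(letterbox_size(1280, 720))  # ((640, 360), (0, 280))
```

A 1280×720 webcam-style image is halved to 640×360, and the remaining 280 rows are padding, so the hand keeps its true proportions.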

Training can take around 1 to 2 hours, or even longer, to complete.
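If you prefer Python over the ‘yolo’ command-line tool, Ultralytics also exposes training through ‘YOLO(...).train(...)’. The wrapper below is a sketch with the same settings as the command above; ‘train_rps_model’ is a hypothetical name, and Ultralytics is imported inside the function so the helper can be defined without triggering the import.

```python
def train_rps_model(
    data_yaml="/content/drive/MyDrive/Datasets/RockPaperScissors/data.yaml",
    epochs=100,
    imgsz=640,
    weights="yolov8l.pt",
):
    """Train a YOLOv8 detector with the same settings as the CLI command above."""
    from ultralytics import YOLO  # imported lazily, only when training actually starts

    model = YOLO(weights)  # pretrained checkpoint, downloaded automatically if missing
    return model.train(data=data_yaml, epochs=epochs, imgsz=imgsz)


# In a Colab cell: train_rps_model()
```

Runs started this way are saved to the same ‘runs’ -> ‘detect’ -> ‘train’ folders as with the CLI (‘train2’, ‘train3’, and so on for later runs).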

When training finishes, open the ‘Files’ panel in Colab and navigate to ‘runs’ -> ‘detect’ -> ‘train’ -> ‘weights’. Inside the ‘weights’ folder you will find two files: ‘best.pt’ (the weights that scored best on the validation set) and ‘last.pt’ (the weights from the final epoch). Download ‘best.pt’.
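Files in Colab’s local workspace are deleted when the runtime disconnects, so it can be worth copying ‘best.pt’ to Google Drive as well. The sketch below assumes the default ‘runs/detect’ location relative to the notebook’s working directory and picks the newest run, since repeated training runs are saved as ‘train’, ‘train2’, and so on; the function name and destination folder are hypothetical choices.

```python
import shutil
from pathlib import Path


def save_best_weights(runs_dir="runs/detect",
                      dest_dir="/content/drive/MyDrive/Datasets/RockPaperScissors"):
    """Copy best.pt from the most recent training run into dest_dir and return the copy's path."""
    candidates = list(Path(runs_dir).glob("train*/weights/best.pt"))
    if not candidates:
        raise FileNotFoundError(f"no best.pt found under {runs_dir}")
    # The most recently written best.pt belongs to the latest run
    newest = max(candidates, key=lambda p: p.stat().st_mtime)
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    return Path(shutil.copy2(newest, dest / "best.pt"))
```

Calling ‘save_best_weights()’ in a new cell right after training leaves a copy on Drive that survives the session.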


Setting Up the Environment

For this project, create a separate folder named ‘RockPaperScissors’. Inside it, create another folder named ‘Weights’ to store the trained YOLO model.

Place the Downloaded YOLO Model

In the previous section, we trained our YOLO model with a custom dataset and downloaded a file named ‘best.pt.’ Now place this file inside the ‘Weights’ folder.

Create Your Python Script

We’re almost done setting up the environment. Open the ‘RockPaperScissors’ project folder in your favorite text editor and create a Python file named ‘rock_paper_scissors.py’ inside it. This is where you’ll write the code.

Your final project file hierarchy should look like the following:

```
RockPaperScissors/
├── Weights/
│   └── best.pt
└── rock_paper_scissors.py
```
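Before running the program, a quick check like the following can confirm that the files are where the hierarchy above expects them. It is a sketch; ‘missing_project_files’ is a hypothetical helper, and the default argument assumes you run it from the folder that contains ‘RockPaperScissors’.

```python
from pathlib import Path

# Files the project hierarchy above expects, relative to the project folder
REQUIRED = ("Weights/best.pt", "rock_paper_scissors.py")


def missing_project_files(project_root="RockPaperScissors"):
    """Return the expected project files that do not exist yet (an empty list means ready)."""
    root = Path(project_root)
    return [rel for rel in REQUIRED if not (root / rel).is_file()]
```

The program loads ‘Weights/best.pt’ with a relative path, so it should also be started from inside the ‘RockPaperScissors’ folder.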

The Program

Here’s the complete Python program that uses YOLOv8 to detect rock-paper-scissors gestures via your webcam. The program captures live video, performs object detection on each frame, and identifies gestures with confidence scores.

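```python
import math
import threading
import time

import cv2
import cvzone
from ultralytics import YOLO

# Load the YOLO model with the custom-trained weights
yolo_model = YOLO("Weights/best.pt")

# Class names, in the same order as the 'names' list in the dataset's data.yaml
class_labels = ['paper', 'rock', 'scissor']

# Latest webcam frame, shared between the capture thread and the main loop
frame = None
frame_lock = threading.Lock()
stop_event = threading.Event()


def capture_video(video_capture):
    """Keep reading frames from the webcam, storing only the most recent one."""
    global frame
    while not stop_event.is_set():
        success, img = video_capture.read()
        if success:
            with frame_lock:
                frame = img


# Open the default webcam (device index 0) for gesture detection
video_capture = cv2.VideoCapture(0)
if not video_capture.isOpened():
    raise SystemExit("Could not open the webcam")

# Start the video capture in a separate background thread
capture_thread = threading.Thread(target=capture_video, args=(video_capture,), daemon=True)
capture_thread.start()

while True:
    # Copy the latest frame so the capture thread cannot replace it while we draw on it
    with frame_lock:
        current = None if frame is None else frame.copy()
    if current is None:
        time.sleep(0.01)  # no frame captured yet; wait briefly
        continue

    # Perform object detection on the current frame
    results = yolo_model(current, verbose=False)

    for r in results:
        for box in r.boxes:
            # Bounding box corners as integer pixel coordinates
            x1, y1, x2, y2 = box.xyxy[0]
            x1, y1, x2, y2 = int(x1), int(y1), int(x2), int(y2)
            w, h = x2 - x1, y2 - y1

            # Confidence rounded up to two decimals, and the predicted class index
            conf = math.ceil(float(box.conf[0]) * 100) / 100
            cls = int(box.cls[0])

            if conf > 0.1:
                cvzone.cornerRect(current, (x1, y1, w, h), t=2)
                cvzone.putTextRect(current, f'{class_labels[cls]} {conf}', (x1, y1 - 10),
                                   scale=0.8, thickness=1, colorR=(255, 0, 0))

    # Display the frame with detections
    cv2.imshow("Image", current)

    # Press 'q' to quit
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# Stop the capture thread, then release the webcam and close the window
stop_event.set()
capture_thread.join(timeout=1)
video_capture.release()
cv2.destroyAllWindows()
cv2.waitKey(1)
```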

Explanation of the Code

- Loading the Model: We start by loading the YOLOv8 model with the custom-trained weights saved in Weights/best.pt. This model is trained specifically to detect rock, paper, and scissors gestures.

- Starting Video Capture: We open the webcam with OpenCV and read frames in a separate background thread, so the detection loop always works on the most recent frame instead of waiting for the camera.

- Running Object Detection: In the main loop, YOLOv8 performs object detection on the latest frame. The model returns bounding boxes around detected gestures along with confidence scores. For every detection with a confidence above 0.1, we draw its bounding box on the frame and label it with its class (rock, paper, or scissors) and confidence.

- Displaying the Output: Each processed frame is displayed in a window. The program continuously captures video and updates the display until you press ‘q’ to exit.
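The box and confidence handling inside the detection loop is plain arithmetic, so it can be pulled out into small helpers and checked without a webcam. The helper names below are hypothetical; they simply mirror what the loop does with the values read from ‘box.xyxy[0]’ and ‘box.conf[0]’.

```python
import math


def to_corner_box(x1, y1, x2, y2):
    """Convert float corner coordinates (xyxy) to integer (x, y, width, height)."""
    x1, y1, x2, y2 = int(x1), int(y1), int(x2), int(y2)  # int() truncates toward zero
    return x1, y1, x2 - x1, y2 - y1


def round_up_confidence(conf):
    """Round a confidence score up to two decimal places."""
    return math.ceil(conf * 100) / 100


print(to_corner_box(10.4, 20.7, 110.2, 220.9))  # (10, 20, 100, 200)
print(round_up_confidence(0.8712))  # 0.88
```

Because the score is rounded up before the ‘conf > 0.1’ check, the displayed value can be slightly higher than the raw model output.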

Output

Summary

In this tutorial, we built a rock-paper-scissors sign detection project using Python, YOLOv8, and OpenCV: we trained YOLOv8 on a custom dataset and used it for real-time hand gesture recognition from a webcam. This is a great introduction to using YOLOv8 with custom datasets for specific object detection tasks.

Whether you’re interested in gesture recognition, machine learning, or real-time video processing, this project is a practical example of what’s possible with Python and YOLOv8.

Now you’re ready to take this project further! Try experimenting with more gestures or enhance the model to recognize complex hand signs. With the skills you’ve learned, there’s no limit to the applications you can create.

For any query related to this project, reach out to me at contact@pyseek.com.


Happy Coding!